How to Access Google Gemini 3 Right Now

Google unveiled Gemini 3 on November 18, 2025, calling it its “most intelligent” AI model to date. While Gemini 3 hasn’t rolled out yet on the official Gemini app on Android or iOS, here is how to access Gemini 3 immediately.

Google makes a bold claim: Gemini 3 is the world’s best model for multimodal understanding. Whether you’re uploading homework photos for extra help, transcribing missed lecture notes, analyzing complex visual data, or processing video content, Gemini 3 handles it with unprecedented accuracy and insight. The AI seamlessly understands context across text, images, audio, and video.

Each generation of Gemini has built upon its predecessor’s foundation, creating an increasingly sophisticated AI ecosystem. Gemini 1 introduced native multimodality and dramatically expanded context windows, allowing the model to process vast amounts of diverse information simultaneously. This was the foundation: the ability to see and understand multiple types of data at once.

Gemini 2 focused on establishing agentic capabilities and advancing reasoning. The 2.5 Pro version topped the LMArena Leaderboard for over six months, demonstrating sustained excellence in real-world performance. This generation taught AI to think more deeply and tackle complex, multi-step problems.

The latest Gemini 3 synthesizes all these capabilities into what Google describes as state-of-the-art reasoning. But the real breakthrough isn’t just about processing power—it’s about understanding context and intent. As Sundar Pichai noted, Gemini 3 has evolved from simply reading text and images to “reading the room,” grasping the subtle nuances and underlying meaning behind user requests.

Benchmark Performance: Approaching AGI

According to Google, the benchmark results for Gemini 3 Pro read like a roadmap toward artificial general intelligence.

Academic and Reasoning Capabilities

Gemini 3 Pro demonstrates PhD-level proficiency across complex academic disciplines:

- LMArena Leaderboard: Achieved a 1501 Elo rating, cementing its position as the top frontier model

- Humanity’s Last Exam (HLE): Scored 37.5% without tools, showcasing intrinsic problem-solving abilities

- GPQA Diamond: An exceptional 91.9% score, indicating mastery of expert-level questions

- MathArena Apex: Set a new state-of-the-art at 23.4% for advanced mathematical reasoning

Multimodal Mastery

Where Gemini 3 truly distinguishes itself is in cross-modal understanding:

- MMMU-Pro (Multimodal): 81% accuracy

- Video-MMMU: An impressive 87.6% score, demonstrating deep analysis of dynamic video content

- SimpleQA Verified: 72.1% for factual accuracy

This demonstrates a model capable of understanding and reasoning across text, images, audio, and video with unprecedented reliability.

Deep Think comes to Gemini

Perhaps one of the most intriguing additions is Gemini 3 Deep Think mode. Deep Think mode promises step-change improvements in reasoning and multimodal understanding. Initially rolling out to testers before reaching Google AI Ultra subscribers, this feature acknowledges that complex problems deserve thorough consideration. It’s the difference between a quick answer and a thoughtful solution.

Redesigned Gemini App

Google hasn’t just upgraded the AI model; it has completely redesigned the app. The new Gemini app interface prioritizes usability, discovery, and organization.

Streamlined Chat Initiation: Starting new conversations is now intuitive and frictionless, addressing a common pain point from earlier versions.

My Stuff Folder: Finally, a solution to the perennial problem of finding previously generated content. All images, videos, and reports are now organized in one accessible location.

Shopping Integration: The app now incorporates product listings, comparison tables, and real-time pricing from Google’s Shopping Graph (indexing over 50 billion products). Gemini transforms from information provider to comprehensive shopping assistant.

Generative Interfaces: This is where Gemini 3 truly breaks new ground. Instead of static text responses, the model creates custom interfaces dynamically tailored to each prompt.

Visual Layout: Magazine-Style Immersion

The Visual Layout feature generates immersive, magazine-style presentations complete with photos, interactive modules, and customization options. Ask Gemini to “plan a 3-day trip to Rome next summer,” and you don’t get a bulleted list—you receive a visual itinerary with destination images, clickable maps, suggested activities, and refinement tools.

It’s the difference between reading about something and experiencing it.

Dynamic View: Real-Time Custom Coding

Dynamic View leverages Gemini 3’s agentic coding capabilities to design and code completely custom user interfaces in real-time. Request an explanation of “the Van Gogh Gallery with life context for each piece,” and you’ll receive an interactive, scrollable timeline where you can tap individual artworks and explore Van Gogh’s life events alongside his creative output.

This transforms passive information consumption into active exploration and discovery—a fundamental reimagining of human-AI interaction.

Vibe Coding

For developers and creators, Gemini 3 introduces what Google calls “vibe coding.” This isn’t just about generating code—it’s about understanding the underlying intent and context behind what you want to create.

When building applications in Canvas, users report that apps are more sophisticated and full-featured than ever before. The model doesn’t just execute commands; it grasps the vision behind your request and brings it to life with appropriate complexity and polish.

Gemini Agent Replacing Digital Assistant

As we all know, Gemini is going to replace the native Google Assistant across all platforms, including Android phones, Wear OS, Android Auto, and even Android XR, the platform for smart glasses.

The most ambitious feature is Gemini Agent, an experimental capability that handles multi-step, complex tasks directly within the Google ecosystem. This represents the evolution from reactive assistant to proactive partner.

What Gemini Agent Does

- Calendar Management: Schedule meetings, set reminders, coordinate events

- Email Organization: Prioritize messages, draft replies, organize inbox

- Research and Booking: Find information across multiple sources and prepare transactions

- Workspace Integration: Seamlessly connects with Gmail, Calendar, Drive, and other Google services

Gemini Agent actively manages aspects of your digital life while keeping you in full control. This is Google’s vision of a true generalist AI agent.

Google is integrating Gemini 3 immediately into core services:

- Search: Available in AI Mode from day one, providing complex reasoning and dynamic search experiences

- Consumer Apps: Available today in the Gemini app

- Enterprise & Developers: Accessible in AI Studio and Vertex AI

- Google Antigravity: A teased agentic development platform for building agent-based applications
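For developers, access through AI Studio typically means calling the Gemini API with an API key. Here is a minimal sketch of what such a request could look like, using only the Python standard library. The model id `gemini-3-pro-preview`, the `v1beta` endpoint path, and the `GEMINI_API_KEY` environment variable name are assumptions rather than details from Google's announcement, so check AI Studio for the exact values available to your account.

```python
import json
import os
import urllib.request

# Assumptions (not from the announcement): the public Gemini API REST root
# and a preview model id. Verify both in AI Studio before relying on them.
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-3-pro-preview"


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the text of the first candidate."""
    api_key = os.environ["GEMINI_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        payload = json.load(resp)
    # Assumes a plain text answer in the first part of the first candidate.
    return payload["candidates"][0]["content"]["parts"][0]["text"]
```

With a key exported in your shell, calling `ask("Plan a 3-day trip to Rome next summer")` would send the prompt and return the model's reply as a string. Keeping `build_request` separate from `ask` makes it easy to inspect the URL and JSON body without spending any tokens.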

How to Enable Gemini 3?

Gemini 3 Pro began its global rollout on November 18, 2025. Users can access it by selecting “Thinking” from the model selector within the Gemini app. However, not everyone is getting it at the same time.

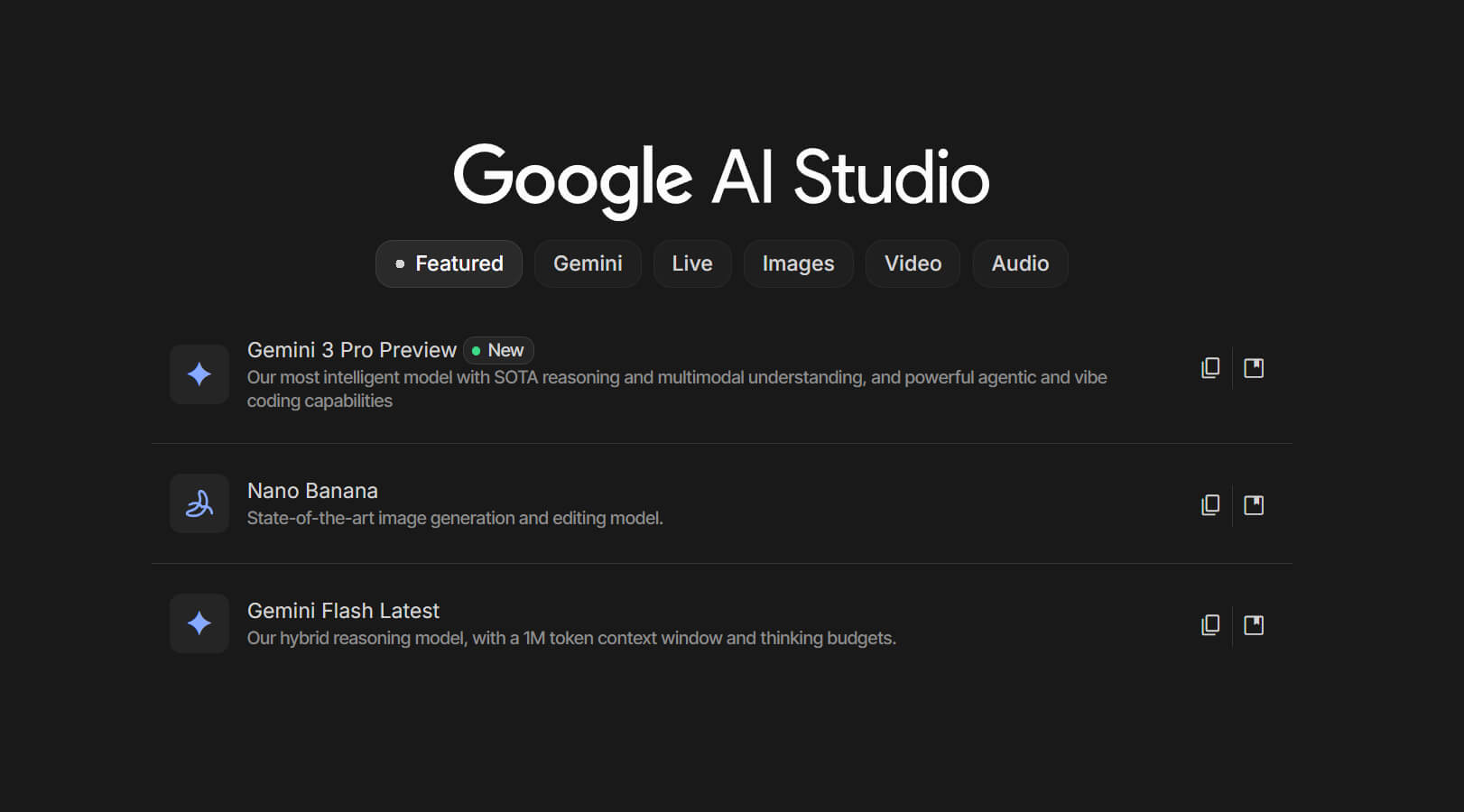

Google AI Studio has Gemini 3 Pro Preview available, and it’s free!

This is Google’s most intelligent model, with SOTA reasoning and multimodal understanding and powerful agentic and vibe coding capabilities:

- Prompts up to 200K tokens: Input $2.00 / Output $12.00 per 1M tokens

- Prompts over 200K tokens: Input $4.00 / Output $18.00 per 1M tokens

- Knowledge cutoff: Jan 2025
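To get a feel for what those rates mean in practice, here is a rough cost estimator. It is a sketch under two assumptions: the listed prices are in USD per 1M tokens, and the tier is chosen by the size of the input prompt. The function and constant names are made up for illustration.

```python
# Rates copied from the pricing list above.
# Assumption: prices are USD per 1M tokens, and the tier depends on input size.
TIER_LIMIT = 200_000
PRICES = {
    "short": {"input": 2.00, "output": 12.00},  # prompts <= 200K tokens
    "long": {"input": 4.00, "output": 18.00},   # prompts > 200K tokens
}


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single request."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    tier = "short" if input_tokens <= TIER_LIMIT else "long"
    rates = PRICES[tier]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# A 100K-token prompt with a 10K-token answer stays in the cheaper tier.
print(f"${estimate_cost(100_000, 10_000):.2f}")
# A 300K-token prompt with the same answer length uses the higher rates.
print(f"${estimate_cost(300_000, 10_000):.2f}")
```

Under these assumptions the first request comes to about $0.32 and the second to about $1.38, so crossing the 200K-token line raises the cost by more than the extra tokens alone would suggest.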

The Google AI Studio app will be available later this year. Meanwhile, access the website by visiting the link below.